AI is driving up energy bills, so what can digital entrepreneurs do to curb soaring costs, optimize power usage, and boost profits?

In the race to harness artificial intelligence for smarter services and faster growth, one hidden cost is becoming impossible to ignore: AI is driving up energy bills. From training massive language models to powering real-time analytics, AI workloads are guzzling electricity at unprecedented rates.

For digital nomads and global entrepreneurs, this translates into soaring operational expenses—especially when you’re running your business from co-working spaces, home offices, or remote retreats where electricity rates can skyrocket. The good news is that with targeted strategies and smart investments, you can rein in those costs without sacrificing your AI-powered competitive edge.

Why “AI driving up energy bills” Should Be on Your Radar

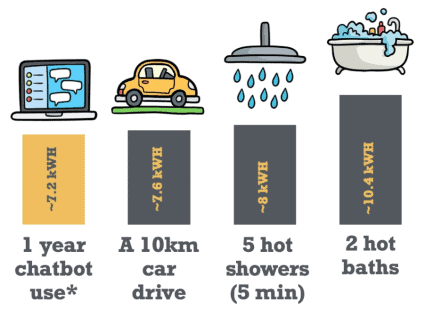

AI workloads are more than just software routines. Training a single large neural network can consume as much energy as hundreds of average homes use in a year. Inference tasks, such as serving personalized content 24/7, add another layer of continuous power draw. As data centers expand to accommodate these demands, grid operators are scrambling to maintain stability and are passing higher rates on to consumers.

- Data center electricity demand grew by 8% in 2024 and is forecasted to climb another 15% by 2027.

- Edge computing hubs and microdata centers have sprouted in nontraditional locations, often tapping into high-cost local grids.

- Peak load cycles for GPUs and TPUs can coincide with local demand peaks, triggering dynamic rate spikes for end users.

For digital entrepreneurs, every AI-driven campaign or automated process can inflate your monthly utility bill—unless you take corrective action early.

The Hidden Energy Footprint of AI Workloads

Understanding why AI is driving up energy bills requires a quick dive into the architecture of AI systems:

- Model Training

Training large-scale AI models can demand hundreds or even thousands of graphics processing units (GPUs) running at full tilt for days or weeks. Each GPU can draw 300–400 watts under load, so a 500-GPU cluster draws roughly 150–200 kilowatts for the GPUs alone.

- Real-Time Inference

Once models are live, they serve predictions continuously. Even lightweight recommendation engines contribute to a cumulative energy draw that adds up over time.

- Data Storage & Cooling

Storing terabytes of training datasets and model weights in high-availability storage arrays further increases electricity consumption. Cooling this hardware often consumes 30–50% of a data center’s total power budget.

Collectively, these factors explain why AI is one of the fastest-growing slices of global electricity consumption, and why that growth ends up on your energy bill.
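
To make these figures concrete, here is a rough back-of-the-envelope sketch in Python. It multiplies GPU count, per-GPU wattage, and run time, then scales the result up by the cooling share quoted above. The function name `training_energy_kwh`, the 512-GPU cluster, and the 14-day run are illustrative assumptions, and treating cooling as the only overhead is a simplification.

```python
def training_energy_kwh(num_gpus: int, watts_per_gpu: float, hours: float,
                        cooling_share: float = 0.4) -> float:
    """Rough facility-level energy estimate for one training run, in kWh.

    cooling_share is the fraction of TOTAL facility power spent on cooling
    (the article quotes 30-50%). Assumption: cooling is the only overhead,
    so total = GPU energy / (1 - cooling_share).
    """
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in [0, 1)")
    gpu_energy_kwh = num_gpus * watts_per_gpu * hours / 1000.0
    return gpu_energy_kwh / (1.0 - cooling_share)


# Hypothetical run: 512 GPUs at 350 W each for 14 days.
estimate = training_energy_kwh(num_gpus=512, watts_per_gpu=350, hours=14 * 24)
print(f"Estimated energy: {estimate:,.0f} kWh")
```

Under these assumptions the run lands at about 100,000 kWh. Swap in your own GPU count, wattage, and duration to get a first-order figure before committing to a training job.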

Impact on Digital Nomads and Global Entrepreneurs

Digital nomads thrive on flexibility, hopping between locations with differing energy costs and grid stability. Meanwhile, global entrepreneurs manage portfolios, remote teams, or digital products across multiple time zones. The rise of AI workloads in their operations can have several direct consequences:

- Unpredictable Utility Bills

Dynamic tariffs and peak-hour surcharges can double or triple charges when AI processes coincide with local grid stress.

- Infrastructure Lock-In

Relying on high-performance cloud GPUs ties you to public cloud pricing, where energy-intensive workloads incur steep “compute” and hidden energy premiums.

- Environmental Footprint

Investors and clients are increasingly conscious of carbon emissions. Demonstrating sustainable AI practices can become a competitive advantage.

- Operational Disruptions

In regions prone to rolling blackouts or grid instability, AI workloads can exacerbate local stress, increasing the risk of downtime for your mission-critical applications.

Recognizing how AI-driven energy costs affect your bottom line and brand reputation is the first step toward more resilient, cost-effective operations.
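
As a small worked example of the "double or triple" effect of peak-hour surcharges, the sketch below prices the same workload at a base rate and at 2x and 3x multipliers. The 350 W workstation, 8 hours a day of use, and the $0.20/kWh rate are made-up illustrative numbers, and `monthly_cost` is a hypothetical helper, not a real tariff calculator.

```python
def monthly_cost(power_kw: float, hours_per_day: float, rate_per_kwh: float,
                 surcharge_multiplier: float = 1.0, days: int = 30) -> float:
    """Monthly electricity cost for a steady workload under a flat rate
    scaled by an optional peak-hour surcharge multiplier."""
    energy_kwh = power_kw * hours_per_day * days
    return energy_kwh * rate_per_kwh * surcharge_multiplier


# Hypothetical setup: one 350 W GPU workstation, 8 h/day, $0.20 per kWh.
for multiplier in (1.0, 2.0, 3.0):
    cost = monthly_cost(0.35, 8, 0.20, surcharge_multiplier=multiplier)
    print(f"surcharge x{multiplier:.0f}: ${cost:.2f} per month")
```

The energy used is identical in all three cases (84 kWh); only the timing relative to the tariff changes the bill.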

Actionable Strategies to Curb AI Energy Costs

You don’t have to choose between AI innovation and runaway bills. Implement these tactics to optimize consumption, stabilize budgets, and future-proof your tech stack:

- Right-Size Your Models

Use model-distillation techniques or parameter pruning to shrink neural nets without major accuracy loss. Leaner models mean less compute time and lower power draw.

- Schedule Off-Peak Training

Shift intensive training jobs to off-peak hours in regions where electricity is cheapest. Some cloud providers offer up to 50% discounts during low-demand windows.

- Embrace Edge & Hybrid Architectures

Offload inference to on-premises or edge devices running optimized, lightweight models. This reduces latency and shifts power consumption away from centralized data centers.

- Invest in Renewable-Powered Cloud

Select cloud regions or providers committed to 100% renewable energy. While per-compute costs may be slightly higher, savings on carbon levies and the ESG benefits can pay off over the long term.

- Implement Demand Response Tools

Connect your workload scheduler to demand-response APIs that throttle non-urgent tasks when grid stress spikes, avoiding peak-hour pricing.

- Monitor and Alert in Real Time

Deploy energy analytics dashboards that track GPU/TPU utilization and associated kilowatt-hours. Set automated alerts when projected monthly consumption exceeds your budget threshold.
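
Two of the tactics above, off-peak scheduling and budget alerts, can be sketched in a few lines of Python. This is a minimal illustration rather than a production scheduler: the hourly rate table is invented, the function names are hypothetical, and the projection simply assumes the rest of the month looks like the days measured so far.

```python
from typing import Sequence


def cheapest_start_hour(hourly_rates: Sequence[float], duration_hours: int) -> int:
    """Return the start hour whose window has the lowest summed rate.

    The window wraps past the end of the list (e.g. past midnight).
    On ties, the earliest start hour wins.
    """
    n = len(hourly_rates)
    if not 0 < duration_hours <= n:
        raise ValueError("duration_hours must be between 1 and len(hourly_rates)")

    def window_cost(start: int) -> float:
        return sum(hourly_rates[(start + i) % n] for i in range(duration_hours))

    return min(range(n), key=window_cost)


def projected_monthly_kwh(kwh_so_far: float, day_of_month: int,
                          days_in_month: int = 30) -> float:
    """Linear projection: assume the daily average so far holds all month."""
    return kwh_so_far / day_of_month * days_in_month


def exceeds_budget(kwh_so_far: float, day_of_month: int, budget_kwh: float,
                   days_in_month: int = 30) -> bool:
    """True when the projected monthly consumption is above the budget."""
    return projected_monthly_kwh(kwh_so_far, day_of_month, days_in_month) > budget_kwh


# Invented tariff: cheap overnight, expensive in the early evening ($/kWh).
example_rates = [0.10] * 6 + [0.20] * 11 + [0.45] * 5 + [0.20] * 2

start = cheapest_start_hour(example_rates, duration_hours=6)
print(f"Start the 6-hour training job at {start:02d}:00")

if exceeds_budget(kwh_so_far=180, day_of_month=12, budget_kwh=400):
    print("Alert: projected consumption exceeds the monthly budget")
```

In practice the rate table would come from your utility or cloud provider, and the alert would feed whatever notification channel you already use.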

Future-Proofing Your Business with Sustainable AI

As policymakers tighten regulations around carbon emissions and data center subsidies, the cost of energy for AI workloads is likely to keep rising. Forward-thinking digital entrepreneurs can get ahead by:

- Building an Energy-Aware Tech Stack

Standardize on tools and libraries designed for efficiency, such as quantization frameworks and serverless inference platforms.

- Establishing an AI Energy Audit Process

Quarterly energy audits, mirroring financial reviews, can uncover wasteful processes, outdated models, or inefficient hardware allocations.

- Cultivating a Green AI Roadmap

Publish an annual sustainability report that outlines your targets for reducing AI energy consumption and achieving carbon neutrality.

- Leveraging Incentives and Grants

Explore government or industry grants aimed at decarbonizing tech operations. Some jurisdictions offer rebates for deploying AI solutions on renewable-powered infrastructure.

By proactively integrating these practices, you transform “AI driving up energy bills” from a looming threat into an opportunity—demonstrating leadership in both innovation and sustainability.
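
One way to start the energy audit described above is a simple script that ranks jobs by estimated energy use and flags those with poor GPU utilization. The sketch below is only a starting point: the `JobRecord` fields, the 50% utilization threshold, and the sample jobs are all hypothetical, and real figures would come from your own monitoring data.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class JobRecord:
    name: str
    gpu_hours: float        # total GPU hours in the audit period
    avg_watts: float        # average draw per GPU while the job ran
    avg_utilization: float  # 0.0 to 1.0

    @property
    def kwh(self) -> float:
        """Estimated GPU energy for the period, in kWh."""
        return self.gpu_hours * self.avg_watts / 1000.0


def audit(jobs: Iterable[JobRecord], min_utilization: float = 0.5) -> List[JobRecord]:
    """Return under-utilized jobs, largest energy consumers first."""
    flagged = [job for job in jobs if job.avg_utilization < min_utilization]
    return sorted(flagged, key=lambda job: job.kwh, reverse=True)


# Made-up sample data for one quarter.
jobs = [
    JobRecord("nightly-retrain", gpu_hours=400, avg_watts=350, avg_utilization=0.85),
    JobRecord("legacy-recommender", gpu_hours=720, avg_watts=300, avg_utilization=0.20),
    JobRecord("ad-hoc-notebooks", gpu_hours=150, avg_watts=250, avg_utilization=0.10),
]

for job in audit(jobs):
    print(f"{job.name}: {job.kwh:.1f} kWh at {job.avg_utilization:.0%} utilization")
```

Jobs that burn a lot of energy at low utilization are usually the first candidates for right-sizing, consolidation, or retirement.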

Conclusion

The rapid ascent of AI workloads is reshaping energy markets and putting pressure on power grids worldwide. For digital nomads and global entrepreneurs, unchecked consumption can erode margins, damage reputations, and limit future growth. But by applying targeted optimization techniques—right-sizing models, scheduling off-peak training, investing in renewables, and embracing edge computing—you can tame those bills and unlock the full potential of AI.